A year ago I set up my own PC-based home router and faced the need to prioritize network traffic. First, I drew a small diagram showing the traffic I had and the priorities I was willing to give it.

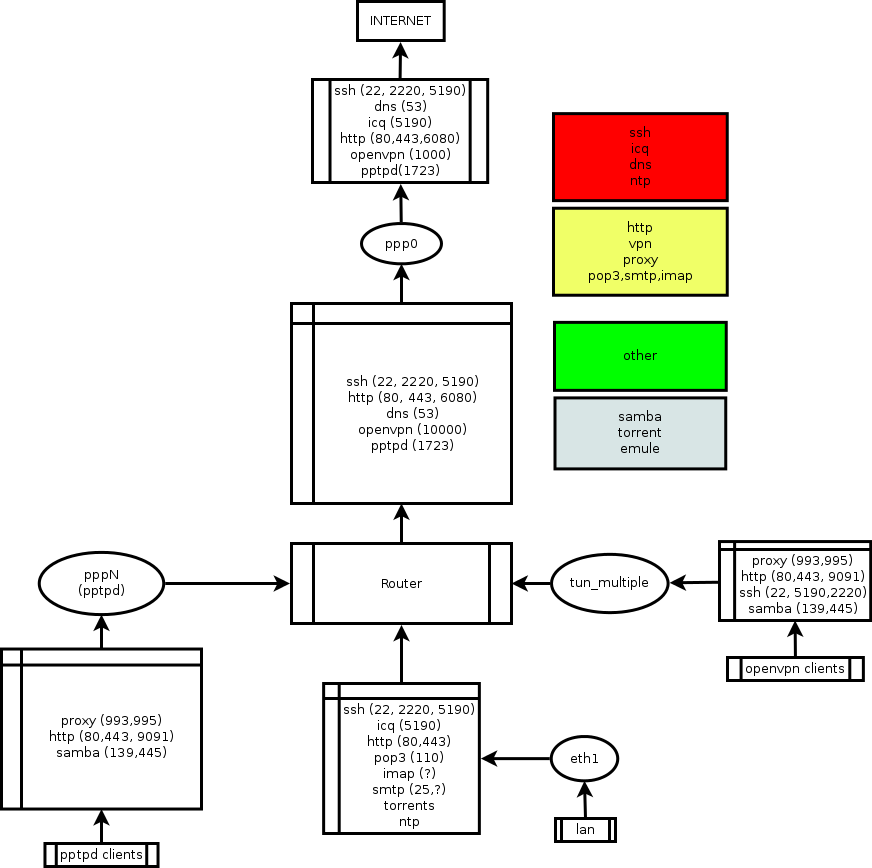

I placed the diagram here, but I must warn you: I made it for myself, so it may look ugly to others. I had one Internet uplink and a few VPN tunnels for personal use. On the diagram I marked the possible sources of traffic and assigned different priorities to different types of traffic (from "realtime" in red to "does not care" in grey). The diagram helped me distinguish the types of traffic and plan how to construct rules for them.

After that, I started to learn how to shape my traffic. First I found that I could do it only with `tc` from the iproute package, and I found this method too complex if I wanted to remember later what my `tc` rules do. At this stage I also chose the HTB discipline for shaping.

Next I tried the HTB.init script and found that method too ugly.

I started to search for a more high-level method and found tcng, which is just brilliant. It processes a special language that lets you describe how you want to shape your traffic in a script-like way. At first I was worried, because the last release of tcng was in 2004, but later I found that tcng works perfectly.

Before I started to learn tcng, I did not know that `tc` can be used in very different ways. For example, you can use `tc` alone to prioritize traffic by port and protocol, or by bits in packet fields. So, let's look at a simple example:

```
dev ppp0 {
    egress {
        class ( <$interactive> ) if tcp_dport == 22;
        class ( <$medium> ) if tcp_dport == 80 || tcp_dport == 443;
        class ( <$bulk> ) if tcp_dport == 21;
        class ( <$default> ) ;

        htb () {
            $interactive = class ( rate 64kbps, ceil 128kbps ) {
                sfq ( perturb 10s );
            }
            $medium = class ( rate 128kbps, ceil 128kbps ) {
                sfq ( perturb 10s );
            }
            $bulk = class ( rate 256kbps, ceil 512kbps ) {
                sfq ( perturb 10s );
            }
            $default = class ( rate 256kbps, ceil 512kbps ) {
                sfq ( perturb 10s );
            }
        }
    }
}
```

I think that even if you know nothing about `tc` or traffic shaping at all, you can easily guess what is happening in this example. As I wrote before, I found raw `tc` syntax too complex; tcng converts rules written in its own language into `tc` commands. Let's see what these rules look like after tcng "compiles" them:

```
tc qdisc add dev ppp0 handle 1:0 root dsmark indices 8 default_index 0
tc qdisc add dev ppp0 handle 2:0 parent 1:0 htb
tc class add dev ppp0 parent 2:0 classid 2:1 htb rate 8000bps ceil 16000bps
tc qdisc add dev ppp0 handle 3:0 parent 2:1 sfq perturb 10
tc class add dev ppp0 parent 2:0 classid 2:2 htb rate 16000bps ceil 16000bps
tc qdisc add dev ppp0 handle 4:0 parent 2:2 sfq perturb 10
tc class add dev ppp0 parent 2:0 classid 2:3 htb rate 32000bps ceil 64000bps
tc qdisc add dev ppp0 handle 5:0 parent 2:3 sfq perturb 10
tc class add dev ppp0 parent 2:0 classid 2:4 htb rate 32000bps ceil 64000bps
tc qdisc add dev ppp0 handle 6:0 parent 2:4 sfq perturb 10
tc filter add dev ppp0 parent 2:0 protocol all prio 1 tcindex mask 0x7 shift 0
tc filter add dev ppp0 parent 2:0 protocol all prio 1 handle 4 tcindex classid 2:4
tc filter add dev ppp0 parent 2:0 protocol all prio 1 handle 3 tcindex classid 2:3
tc filter add dev ppp0 parent 2:0 protocol all prio 1 handle 2 tcindex classid 2:2
tc filter add dev ppp0 parent 2:0 protocol all prio 1 handle 1 tcindex classid 2:1
tc filter add dev ppp0 parent 1:0 protocol all prio 1 handle 1:0:0 u32 divisor 1
tc filter add dev ppp0 parent 1:0 protocol all prio 1 u32 match u8 0x6 0xff at 9 offset at 0 mask 0f00 shift 6 eat link 1:0:0
tc filter add dev ppp0 parent 1:0 protocol all prio 1 handle 1:0:1 u32 ht 1:0:0 match u16 0x16 0xffff at 2 classid 1:1
tc filter add dev ppp0 parent 1:0 protocol all prio 1 handle 2:0:0 u32 divisor 1
tc filter add dev ppp0 parent 1:0 protocol all prio 1 u32 match u8 0x6 0xff at 9 offset at 0 mask 0f00 shift 6 eat link 2:0:0
tc filter add dev ppp0 parent 1:0 protocol all prio 1 handle 2:0:1 u32 ht 2:0:0 match u16 0x50 0xffff at 2 classid 1:2
tc filter add dev ppp0 parent 1:0 protocol all prio 1 handle 3:0:0 u32 divisor 1
tc filter add dev ppp0 parent 1:0 protocol all prio 1 u32 match u8 0x6 0xff at 9 offset at 0 mask 0f00 shift 6 eat link 3:0:0
tc filter add dev ppp0 parent 1:0 protocol all prio 1 handle 3:0:1 u32 ht 3:0:0 match u16 0x1bb 0xffff at 2 classid 1:2
tc filter add dev ppp0 parent 1:0 protocol all prio 1 handle 4:0:0 u32 divisor 1
tc filter add dev ppp0 parent 1:0 protocol all prio 1 u32 match u8 0x6 0xff at 9 offset at 0 mask 0f00 shift 6 eat link 4:0:0
tc filter add dev ppp0 parent 1:0 protocol all prio 1 handle 4:0:1 u32 ht 4:0:0 match u16 0x15 0xffff at 2 classid 1:3
```

Huh, now it is not as simple as before.
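Two details make the compiled output easier to read. First, tcng's rate suffixes count bits per second, while `bps` in `tc`'s own syntax means bytes per second, so `rate 64kbps` becomes `rate 8000bps`. Second, each u32 filter checks `match u8 0x6 0xff at 9` (IP protocol 6, i.e. TCP), then `offset at 0 mask 0f00 shift 6 eat` computes the IP header length (IHL × 4) and skips it, and finally `match u16 0x16 0xffff at 2` compares the TCP destination port with 0x16 = 22 (likewise 0x50 = 80, 0x1bb = 443 and 0x15 = 21). A minimal Python sketch of both steps, meant as a model for understanding rather than how tcng or the kernel implement them; all function names are hypothetical:

```python
import re
import struct

# tcng rates are bits per second with decimal prefixes, which is consistent
# with 64kbps turning into "rate 8000bps" (bytes per second) in the tc output.
PREFIXES = {"": 1, "k": 1000, "M": 1000000}

def tcng_rate_to_tc_bytes(rate: str) -> int:
    """Convert a tcng rate such as '64kbps' into tc bytes per second."""
    m = re.fullmatch(r"(\d+)([kM]?)bps", rate)
    if m is None:
        raise ValueError(f"unsupported rate: {rate!r}")
    return int(m.group(1)) * PREFIXES[m.group(2)] // 8

def u32_tcp_dport_match(packet: bytes, port: int) -> bool:
    """Emulate: match u8 0x6 0xff at 9; offset at 0 mask 0f00 shift 6 eat;
    match u16 <port> 0xffff at 2 (offsets after 'eat' are in the TCP header)."""
    if packet[9] != 0x06:                   # IPv4 protocol field must be TCP
        return False
    first16 = struct.unpack_from("!H", packet, 0)[0]
    header_len = (first16 & 0x0F00) >> 6   # IHL nibble shifted down = IHL * 4
    dport = struct.unpack_from("!H", packet, header_len + 2)[0]
    return dport == port

def build_packet(dport: int, proto: int = 6, ihl: int = 5) -> bytes:
    """Minimal IPv4 header of `ihl` 32-bit words followed by a port pair."""
    ip = bytearray(ihl * 4)
    ip[0] = (4 << 4) | ihl                  # version 4 + header length in words
    ip[9] = proto                           # protocol field
    return bytes(ip) + struct.pack("!HH", 40000, dport)  # source, destination

for rate in ("64kbps", "128kbps", "256kbps", "512kbps"):
    print(rate, "->", f"{tcng_rate_to_tc_bytes(rate)}bps")
for port in (0x16, 0x50, 0x1BB, 0x15):
    print(hex(port), "=", port, u32_tcp_dport_match(build_packet(port), port))
```

The header-length trick is why the filters work even when the IP header carries options: with IHL = 6 the same mask and shift yield 24 instead of 20.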

OK, let's talk about a few things you need to understand if you do not want to read the theory of traffic shaping and `tc`.

First, everything I am going to say applies only to the network and transport layers of the TCP/IP stack; it may also hold for other layers or situations, but you may find exceptions.

Second, you cannot directly control the speed at which a remote host sends you data; you can only control the speed at which you send data and the speed at which you accept it. That is, if you have a 10 Mbit/s channel and a remote host sends you something at 10 Mbit/s, your channel will be flooded by that host.

With TCP you can control the speed indirectly: if you accept packets at only 5 Mbit/s, TCP's flow and congestion control will make the remote host slow down. With UDP you cannot do anything at the network/transport level, except shut down your interface. =)

So why am I telling you this? In most cases, when you shape traffic in Linux, you shape outbound traffic. This is effective, except when the inbound bandwidth is flooded with UDP or with specially forged packets (for example, if you are under a TCP SYN flood attack). When I wrote my rules, I wrote them for outbound traffic. You can try to control inbound traffic with IFB devices, but that is another story.

All rules and disciplines are bound, directly or indirectly, to one of the network interfaces.

Third, Linux has several different disciplines for traffic shaping (read the `tc` manual if you want to know more); I prefer HTB for my purposes. When you use HTB, you must classify traffic; all traffic that is not classified goes to the default class.
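The classification part of the first tcng example is an ordered list of conditions with a final unconditional entry that catches everything else. A minimal Python model of that idea, assuming first-match semantics (the first condition that holds decides the class) and reusing the class names from that example; the `Packet` and `Rule` types and the `classify` function are hypothetical:

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Packet:
    proto: str
    dport: int

@dataclass
class Rule:
    cls: str
    cond: Optional[Callable[[Packet], bool]]  # None means "always matches"

# Same order as the tcng example; the last rule plays the role of
# "class ( <$default> ) ;" and catches all unclassified traffic.
RULES = [
    Rule("interactive", lambda p: p.proto == "tcp" and p.dport == 22),
    Rule("medium", lambda p: p.proto == "tcp" and p.dport in (80, 443)),
    Rule("bulk", lambda p: p.proto == "tcp" and p.dport == 21),
    Rule("default", None),
]

def classify(pkt: Packet, rules=RULES) -> str:
    """Return the class of the first rule whose condition holds."""
    for rule in rules:
        if rule.cond is None or rule.cond(pkt):
            return rule.cls
    raise LookupError("no default class defined")

print(classify(Packet("tcp", 22)))   # interactive
print(classify(Packet("tcp", 443)))  # medium
print(classify(Packet("udp", 53)))   # default
```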

Huh, I reread what I wrote and now I think it does not look so simple if you have not read the theory of traffic shaping in Linux. Anyway, if you do not understand what I am talking about, you can read the `tc` manual and LARTC, or feel free to experiment and see what happens. =)

After I learned tcng, I wrote a complete set of rules for shaping traffic on ppp0, but when I tried to compile them, I noticed that tcng was using a lot of CPU and not responding. I thought it was a bug, but after a few minutes I got about 30,000 lines of `tc` commands. This happened because I started using tcng without understanding how it compiles rules. Here is a simple example of what happened:

```
#include "ports.tc"

dev ppp0 {
    egress {
        /* all of our classification happens here */
        class ( <$test> ) if tcp_sport > 1024 && tcp_dport < 1024 || udp_sport > 1024 && udp_dport > 1024;
        class ( <$default> );

        /* all of our queues are added here */
        htb () {
            $test = class ( rate 64kbps, ceil 128kbps ) {
                sfq ( perturb 10s );
            }
            $default = class ( rate 256kbps, ceil 512kbps ) {
                sfq ( perturb 10s );
            }
        }
    }
}
```

This is just an example. What happens if you try to compile it? Let's see:

```
$ tcng /tmp/tcngtest | wc -l
732
```

You can look at the result yourself. In short, when tcng compiles rules it cannot use logical comparisons such as "destination port > 1024"; instead it uses bit matching at offsets in the packet, and as a result you get hundreds of rules full of bits, bit masks, offsets and so on. I no longer have my old rules to show exactly how I ended up with about 30k lines, but I will show how I solved this problem with iptables and tcng.
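The blow-up is easier to understand once you note that a value/mask match, like u32's, can only test whether selected bits equal a pattern. A condition such as "port > 1024" therefore has to be split into power-of-two aligned blocks, and when two such ranges are combined with `&&`, each pair of blocks needs its own match if every filter entry tests one pattern per field. A minimal Python sketch of the standard range-to-prefix split, offered as an illustration of the principle rather than tcng's actual algorithm; `range_to_masks` is a hypothetical name:

```python
def range_to_masks(lo: int, hi: int, bits: int = 16) -> list[tuple[int, int]]:
    """Split the inclusive range [lo, hi] into (value, mask) pairs so that
    x is in the range iff (x & mask) == value for one of the pairs."""
    full = (1 << bits) - 1
    result = []
    while lo <= hi:
        # Largest power-of-two block aligned at lo ...
        size = lo & -lo if lo else 1 << bits
        # ... that does not run past hi.
        while size > hi - lo + 1:
            size //= 2
        result.append((lo, full & ~(size - 1)))
        lo += size
    return result

above_1024 = range_to_masks(1025, 65535)  # "port > 1024"
below_1024 = range_to_masks(0, 1023)      # "port < 1024"
print(len(above_1024), len(below_1024))
# tcp: sport blocks x dport blocks; udp: sport blocks x dport blocks
print(len(above_1024) * len(below_1024) + len(above_1024) ** 2)
```

"port < 1024" collapses into a single pattern (value 0, mask 0xfc00), while "port > 1024" needs 15 patterns, so the UDP half of the example alone yields 15 × 15 = 225 combinations.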

Because I could not use complex packet classification rules in tcng, I used iptables to mark packets according to those rules, and simple tcng rules to shape by mark. You can learn about marks in the iptables manual; as I remember, iptables marks packets inside the OS network stack, so the mark is visible locally but invisible to other hosts.

I slightly modified the classification you can see in the diagram: I added more classes, and now traffic is divided into realtime, high priority, medium priority low latency, medium priority, low priority and default.

I also want to explain the rule for low-priority traffic. The first task is to mark P2P traffic as low priority, and there are two ways to do it: use L7 inspection, or use dumb rules based on the network/transport layer. L7 inspection gives a low false-positive rate but is slow; dumb rules give a high false-positive rate but are fast. For L7 inspection you can use OpenDPI, but I prefer the second solution: I classify all traffic from unprivileged ports to unprivileged ports as low priority. If you have something important that sends data from an unprivileged port to an unprivileged port, you can add extra rules for that traffic (if you know its ports).
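The "dumb" rule is just a predicate on the two port numbers, evaluated after any explicit exceptions. A minimal Python sketch, assuming "unprivileged" means port 1024 or above (matching the `1024:65535` ranges in the iptables dump below); the exception for UDP port 27015 and the function name `is_probably_p2p` are hypothetical, purely to show how known important traffic is rescued from the catch-all:

```python
UNPRIVILEGED_MIN = 1024

# Hypothetical exception: a known service that would otherwise look like P2P.
EXCEPTIONS = {("udp", 27015)}

def is_probably_p2p(proto: str, sport: int, dport: int) -> bool:
    """Port-based heuristic: unprivileged -> unprivileged counts as P2P.

    Fast but crude; every non-P2P flow between high ports is a false positive
    unless it is listed in EXCEPTIONS, which are checked first.
    """
    if (proto, sport) in EXCEPTIONS or (proto, dport) in EXCEPTIONS:
        return False
    return sport >= UNPRIVILEGED_MIN and dport >= UNPRIVILEGED_MIN

print(is_probably_p2p("tcp", 51413, 6881))   # True: BitTorrent-style ports
print(is_probably_p2p("tcp", 40000, 443))    # False: HTTPS uses a privileged port
print(is_probably_p2p("udp", 40000, 27015))  # False: listed exception
```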

OK, let's look at the tcng rules. I created rules only for my Internet connection. I connect over PPPoE, so I placed the following script in /etc/ppp/ip-up.d/qos:

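```
#!/bin/sh
# Run from /etc/ppp/ip-up.d; the environment provides PPP_IFACE and CALL_FILE.
if [ -n "$PPP_IFACE" ]
then
    if [ -f "/etc/tcng/${CALL_FILE}.tc" ]
    then
        # Put the real interface name into the rules, compile them with tcng
        # and run the resulting tc commands.
        /bin/sed "s/PPP_IFACE/${PPP_IFACE}/g" "/etc/tcng/${CALL_FILE}.tc" | /usr/bin/tcng -r | /bin/bash
    fi
fi
```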

I put my rules in /etc/tcng/dsl-provider.tc; the script runs every time I "call" dsl-provider. The name of the PPP interface can vary, so instead of an interface name I put the placeholder `PPP_IFACE` into the rules. When the PPP connection is established, the script replaces the placeholder with the actual interface name, compiles the rules and executes them.

Here are my rules:

```
#include "fields.tc"
#include "ports.tc"

dev PPP_IFACE {
    egress {
        class( <$realtime> ) if meta_nfmark == 0x10;
        class( <$high> ) if meta_nfmark == 0x15;
        class( <$mid_lowlatency> ) if meta_nfmark == 0x20;
        class( <$mid_noname> ) if meta_nfmark == 0x25;
        class( <$lowp> ) if meta_nfmark == 0x30;
        class( <$default> ) if 1; //default

        htb(quantum 1500B) {
            class( rate 100Mbps, ceil 100Mbps ) {
                class( prio 1, rate 2Mbps, ceil 100Mbps ) {
                    $realtime = class( prio 1, rate 1Mbps, ceil 100Mbps ) { sfq; }
                    $high = class( prio 2, rate 1Mbps, ceil 100Mbps ) { sfq; }
                };
                class( prio 2, rate 2Mbps, ceil 100Mbps, quantum 1250B ) {
                    $mid_lowlatency = class( prio 1, rate 1Mbps, ceil 100Mbps ) { sfq; }
                    $mid_noname = class( prio 2, rate 1Mbps, ceil 100Mbps ) { sfq; }
                };
                $lowp = class( prio 8, rate 1Mbps, ceil 100Mbps ) { sfq; };
                $default = class( prio 3, rate 1Mbps, ceil 100Mbps ) { sfq; };
            }
        }
    }
}
```
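A few properties of a hierarchy like this can be checked mechanically: that each parent's `rate` is at least the sum of its children's rates (the usual HTB guideline, so the guaranteed rates can actually be honoured), that no child's `ceil` exceeds its parent's, and which leaf class a given mark falls into. A minimal Python sketch with the tree transcribed from the rules above (rates in Mbit/s). The intermediate names `realtime+high` and `mid` are made up because those classes are unnamed in the rules, the first-match mark lookup is an assumption about how tcng evaluates the `if` list, and `Cls`, `check_rates` and `class_for_mark` are hypothetical:

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass
class Cls:
    name: str
    rate: float  # Mbit/s
    ceil: float  # Mbit/s
    children: list[Cls] = field(default_factory=list)

TREE = Cls("root", 100, 100, children=[
    Cls("realtime+high", 2, 100, children=[
        Cls("realtime", 1, 100),
        Cls("high", 1, 100),
    ]),
    Cls("mid", 2, 100, children=[
        Cls("mid_lowlatency", 1, 100),
        Cls("mid_noname", 1, 100),
    ]),
    Cls("lowp", 1, 100),
    Cls("default", 1, 100),
])

def check_rates(node: Cls) -> list[str]:
    """Report oversubscribed parents and children whose ceil exceeds the parent's."""
    problems = []
    total = sum(c.rate for c in node.children)
    if node.children and total > node.rate:
        problems.append(f"{node.name}: children sum {total} > rate {node.rate}")
    for child in node.children:
        if child.ceil > node.ceil:
            problems.append(f"{child.name}: ceil {child.ceil} > parent ceil {node.ceil}")
        problems.extend(check_rates(child))
    return problems

# Mark -> leaf class, in the order of the "class(...) if meta_nfmark == ..." lines.
MARKS = [(0x10, "realtime"), (0x15, "high"), (0x20, "mid_lowlatency"),
         (0x25, "mid_noname"), (0x30, "lowp")]

def class_for_mark(mark: int) -> str:
    for value, name in MARKS:
        if mark == value:
            return name
    return "default"  # class( <$default> ) if 1;

print(check_rates(TREE))
print(class_for_mark(0x20), class_for_mark(0x0))
```

A mark that none of the `meta_nfmark` lines expects silently lands in the default class, so it is worth comparing the marks set by iptables against this list.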

Pay attention: the default class has a higher priority than the low-priority class (in HTB a lower `prio` number means a higher priority, and default has 3 while low priority has 8). I use different class names here, but I think that is not a big problem for understanding the idea of the rules. Next, let's talk about packet marking. To mark a packet in iptables, use `-j MARK --set-mark MARK_VALUE`; to match marked packets in tcng, use the `meta_nfmark` field. The marking rules go into the `mangle` table, and they must run before the packet reaches the egress qdisc, otherwise `tc` will not see the mark. I created a `QOS` chain in the `mangle` table and added a `-j QOS` rule to the `POSTROUTING` chain, which packets traverse before they are queued for transmission. Here is part of the iptables-save dump:

```
-A POSTROUTING -o ppp0 -j QOS
-A QOS -p icmp -m mark --mark 0x0 -m comment --comment "MARK all icmp traffic as realtime" -j MARK --set-mark 0x10/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m tcp --dport 53 -m comment --comment "MARK tcp DNS queries as realtime" -j MARK --set-mark 0x10/0xffffffff
-A QOS -p udp -m mark --mark 0x0 -m udp --dport 53 -m comment --comment "MARK udp DNS queries as realtime" -j MARK --set-mark 0x10/0xffffffff
-A QOS -p udp -m mark --mark 0x0 -m udp --dport 123 -m comment --comment "MARK udp NTP queries as realtime" -j MARK --set-mark 0x10/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m tcp --dport 123 -m comment --comment "MARK tcp NTP queries as realtime" -j MARK --set-mark 0x10/0xffffffff
-A QOS -m mark --mark 0x0 -m length --length 0:128 -m comment --comment "MARK all small packets as realtime" -j MARK --set-mark 0x10/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m multiport --dports 22,5190 -m length --length 0:1500 -m comment --comment "MARK all small SSH and ICQ packets as high priority" -j MARK --set-mark 0x15/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m multiport --dports 80,443 -m comment --comment "MARK all HTTP traffic as medium interactive priority" -j MARK --set-mark 0x20/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m multiport --dports 143,220,993 -m comment --comment "MARK IMAP traffic as medium interactive priority" -j MARK --set-mark 0x20/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m multiport --dports 110,995 -m comment --comment "MARK POP3 traffic as medium priority" -j MARK --set-mark 0x25/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m multiport --dports 25,465 -m comment --comment "MARK SMTP traffic as medium priority" -j MARK --set-mark 0x25/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m tcp --sport 10000 -m comment --comment "MARK outgoing OpenVPN as medium priority" -j MARK --set-mark 0x25/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m tcp --sport 1723 -m comment --comment "MARK outgoing pptp as medium priority" -j MARK --set-mark 0x25/0xffffffff
-A QOS -p tcp -m mark --mark 0x0 -m multiport --sports 80,6080 -m comment --comment "MARK outgoing HTTP as medium priority" -j MARK --set-mark 0x25/0xffffffff
# low priority uses 0x30, the mark matched by the $lowp class in the tcng rules
-A QOS -p tcp -m mark --mark 0x0 -m multiport --dports 1024:65535 -m multiport --sports 1024:65535 -m comment --comment "MARK low priority TCP traffic" -j MARK --set-mark 0x30/0xffffffff
-A QOS -p udp -m mark --mark 0x0 -m multiport --dports 1024:65535 -m multiport --sports 1024:65535 -m comment --comment "MARK low priority UDP traffic" -j MARK --set-mark 0x30/0xffffffff
```

I wrote the rules with comments, so they are easy to understand. Also, I have just noticed that I should add these rules from the script that runs when the PPPoE connection is established, because the `-o ppp0` match needs the interface name, and I do not know the name of the PPP interface before the connection is up.
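Every rule in the `QOS` chain starts with `-m mark --mark 0x0`, and the `MARK` target does not stop chain traversal, so the chain behaves like a first-match classifier: the first rule that marks a packet decides its class. As documented for iptables, `--set-mark value/mask` zeroes the bits given by mask and ORs in value, and `--mark value/mask` ANDs the mark with mask before comparing. A trimmed Python model with a few rules transcribed from the dump in chain order (most rules omitted for brevity; the low-priority value is the `0x30` that the `$lowp` class matches; `Pkt`, `QOS_RULES` and the functions are hypothetical names):

```python
from dataclasses import dataclass
from typing import Callable

MASK32 = 0xFFFFFFFF

def set_mark(mark: int, value: int, mask: int = MASK32) -> int:
    """-j MARK --set-mark value/mask: zero the bits in mask, then OR in value."""
    return ((mark & ~mask) | value) & MASK32

def mark_matches(mark: int, value: int, mask: int = MASK32) -> bool:
    """-m mark --mark value/mask: AND the mark with mask, then compare."""
    return (mark & mask) == value

@dataclass
class Pkt:
    proto: str   # "tcp", "udp" or "icmp"
    sport: int
    dport: int
    length: int  # packet length as tested by -m length

# (condition, mark to set) for a subset of the "-A QOS ..." rules, in order.
QOS_RULES: list[tuple[Callable[[Pkt], bool], int]] = [
    (lambda p: p.proto == "icmp", 0x10),
    (lambda p: p.proto in ("tcp", "udp") and p.dport == 53, 0x10),
    (lambda p: p.length <= 128, 0x10),
    (lambda p: p.proto == "tcp" and p.dport in (22, 5190) and p.length <= 1500, 0x15),
    (lambda p: p.proto == "tcp" and p.dport in (80, 443), 0x20),
    (lambda p: p.proto in ("tcp", "udp") and p.sport >= 1024 and p.dport >= 1024, 0x30),
]

def run_qos_chain(pkt: Pkt, mark: int = 0x0) -> int:
    """Walk the chain; a rule only fires while the mark is still 0x0."""
    for cond, value in QOS_RULES:
        if mark_matches(mark, 0x0) and cond(pkt):
            mark = set_mark(mark, value)  # non-terminating: keep walking
    return mark

print(hex(run_qos_chain(Pkt("tcp", 40000, 22, 60))))      # small-packet rule wins
print(hex(run_qos_chain(Pkt("tcp", 40000, 22, 1400))))    # SSH
print(hex(run_qos_chain(Pkt("tcp", 40000, 443, 1400))))   # HTTPS
print(hex(run_qos_chain(Pkt("udp", 50000, 6881, 1400))))  # high port to high port
```

This is also why rule order matters: a small SSH packet is caught by the "all small packets" rule before the SSH rule ever sees it.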

In conclusion, I just wanted to show that tcng is a very useful tool that helps you write clear rules for traffic shaping. However, it cannot replace knowledge of how traffic shaping works in Linux. I highly recommend reading LARTC and the `tc` manual before you start using `tcng`.